It is always sad to finish reading a great book, but Isaacson ends his beautifully with Ada Lovelace’s considerations (from the 19th century!) about the role of computers. “Ada might also be justified in boasting that she was correct, at least thus far, in her more controversial contention that no computer, no matter how powerful, would ever truly be a “thinking” machine. A century after she died, Alan Turing dubbed this “Lady Lovelace’s Objection” and tried to dismiss it by providing an operational definition of a thinking machine. […] But it’s now been more than sixty years, and the machines that attempt to fool people on the test are at best engaging in lame conversation tricks rather than actual thinking. Certainly none has cleared Ada’s higher bar of being able to “originate” any thoughts of its own. […] Artificial intelligence enthusiasts have long been promising, or threatening, that machines like HAL would soon emerge and prove Ada wrong. Such was the promise of the 1956 conference at Dartmouth organized by John McCarthy and Marvin Minsky, where the field of artificial intelligence was launched. The conference concluded that a breakthrough was about twenty years away. It wasn’t.” [Page 468]

Ada, Countess of Lovelace, 1840

John von Neumann realized that the architecture of the human brain is fundamentally different. Digital computers deal in precise units, whereas the brain, to the extent we understand it, is also partly an analog system which deals with a continuum of possibilities, […] not just binary yes-no data but also answers such as “maybe” and “probably” and infinite other nuances, including occasional bafflement. Von Neumann suggested that the future of intelligent computing might require abandoning the purely digital approach and creating “mixed procedures”. [Page 469]

“Artificial Intelligence”

Discussion about artificial intelligence flared up a bit, at least in the popular press, after IBM’s Deep Blue, a chess-playing machine, beat the world champion Garry Kasparov in 1997 and then Watson, its natural-language question-answering computer, won at Jeopardy! But […] these were not breakthroughs of human-like artificial intelligence, as IBM’s CEO was first to admit. Deep Blue won by brute force. […To] one question about the “anatomical oddity” of the former Olympic gymnast George Eyser, Watson answered “What is a leg?” The correct answer was that Eyser was missing a leg. The problem was understanding “oddity”, explained David Ferrucci, who ran the Watson project at IBM. “The computer wouldn’t know that a missing leg is odder than anything else.” […]

“Watson did not understand the questions, nor its answers, nor that some of its answers were right and some wrong, nor that it was playing a game, nor that it won – because it doesn’t understand anything,” according to John Searle [a Berkeley philosophy professor]. “Computers today are brilliant idiots,” said John E. Kelly III, IBM’s director of research. “These recent achievements have, ironically, underscored the limitations of computer science and artificial intelligence,” said Professor Tomaso Poggio, director of the Center for Brains, Minds, and Machines at MIT. “We do not yet understand how the brain gives rise to intelligence, nor do we know how to build machines that are as broadly intelligent as we are.” Ask Google “Can a crocodile play basketball?” and it will have no clue, even though a toddler could tell you, after a bit of giggling. [Pages 470-71] I tried the question on Google and guess what? It returned the extract by Isaacson…

The human brain not only combines analog and digital processes, it also is a distributed system, like the Internet, rather than a centralized one. […] It took scientists forty years to map the neurological activity of the one-millimeter-long roundworm, which has 302 neurons and 8,000 synapses. The human brain has 86 billion neurons and up to 150 trillion synapses. […] IBM and Qualcomm each disclosed plans to build “neuromorphic”, or brain-like, computer processors, and a European research consortium called the Human Brain Project announced that it had built a neuromorphic microchip that incorporated “fifty million plastic synapses and 200,000 biologically realistic neuron models on a single 8-inch silicon wafer.” […] These latest advances may even lead to the “Singularity”, a term that von Neumann coined and the futurist Ray Kurzweil and the science fiction writer Vernor Vinge popularized, which is sometimes used to describe the moment when computers are not only smarter than humans but also can design themselves to be even supersmarter, and will thus no longer need us mortals. Isaacson is wiser than I am (I feel that these ideas are stupid) when he adds: “We can leave the debate to the futurists. Indeed, depending on your definition of consciousness, it may never happen. We can leave “that” debate to the philosophers and theologians. ‘Human ingenuity,’ wrote Leonardo da Vinci, ‘will never devise any inventions more beautiful, nor more simple, nor more to the purpose than Nature does.’” [Pages 472-74]

Computers as a Complement to Humans

Isaacson adds: “There is, however, another possibility, one that Ada Lovelace would like. Machines would not replace humans but would instead become their partners. What humans would bring is originality and creativity” [page 475]. After explaining that in a 2005 chess tournament, “the final winner was not a grandmaster nor a state-of-the-art computer, not even a combination of both, but two American amateurs who used three computers at the same time and knew how to manage the process of collaborating with their machines” (page 476) and that “in order to be useful, the IBM team realized [Watson] needed to interact [with humans] in a manner that made collaboration pleasant” (page 477), Isaacson further speculates:

Let us assume, for example, that a machine someday exhibits all of the mental capabilities of a human: giving the outward appearance of recognizing patterns, perceiving emotions, appreciating beauty, creating art, having desires, forming moral values, and pursuing goals. Such a machine might be able to pass a Turing test. It might even pass what we could call the Ada test, which is that it could appear to “originate” its own thoughts that go beyond what we humans program it to do. There would, however, be still another hurdle before we could say that artificial intelligence has triumphed over augmented intelligence. We call it the Licklider Test. It would go beyond asking whether a machine could replicate all the components of human intelligence to ask whether the machine accomplishes these tasks better when whirring away completely on its own or when working in conjunction with humans. In other words, is it possible that humans and machines working in partnership will be indefinitely more powerful than an artificial intelligence machine working alone? If so, then “man-computer symbiosis,” as Licklider called it, will remain triumphant. Artificial Intelligence need not be the holy grail of computing. The goal instead could be to find ways to optimize the collaboration between human and machine capabilities – to forge a partnership in which we let the machines do what they do best, and they let us do what we do best. [Pages 478-79]

Ada’s Poetical Science

At his last such appearance, for the iPad 2 in 2011, Steve Jobs declared: “It’s in Apple’s DNA that technology alone is not enough – that it’s technology married with liberal arts, married with the humanities, that yields us the result that makes our heart sing”. The converse to this paean to the humanities, however, is also true. People who love the arts and humanities should endeavor to appreciate the beauties of math and physics, just as Ada did. Otherwise, they will be left as bystanders at the intersection of arts and science, where most digital-age creativity will occur. They will surrender control of that territory to the engineers. Many people who celebrate the arts and the humanities, who applaud vigorously the tributes to their importance in our schools, will proclaim without shame (and sometimes even joke) that they don’t understand math or physics. They extol the virtues of learning Latin, but they are clueless about how to write an algorithm or tell BASIC from C++, Python from Pascal. They consider people who don’t know Hamlet from Macbeth to be Philistines, yet they might merrily admit that they don’t know the difference between a gene and a chromosome, or a transistor and a capacitor, or an integral and a differential equation. These concepts may seem difficult. Yes, but so, too, is Hamlet. And like Hamlet, each of these concepts is beautiful. Like an elegant mathematical equation, they are expressions of the glories of the universe. [Pages 486-87]

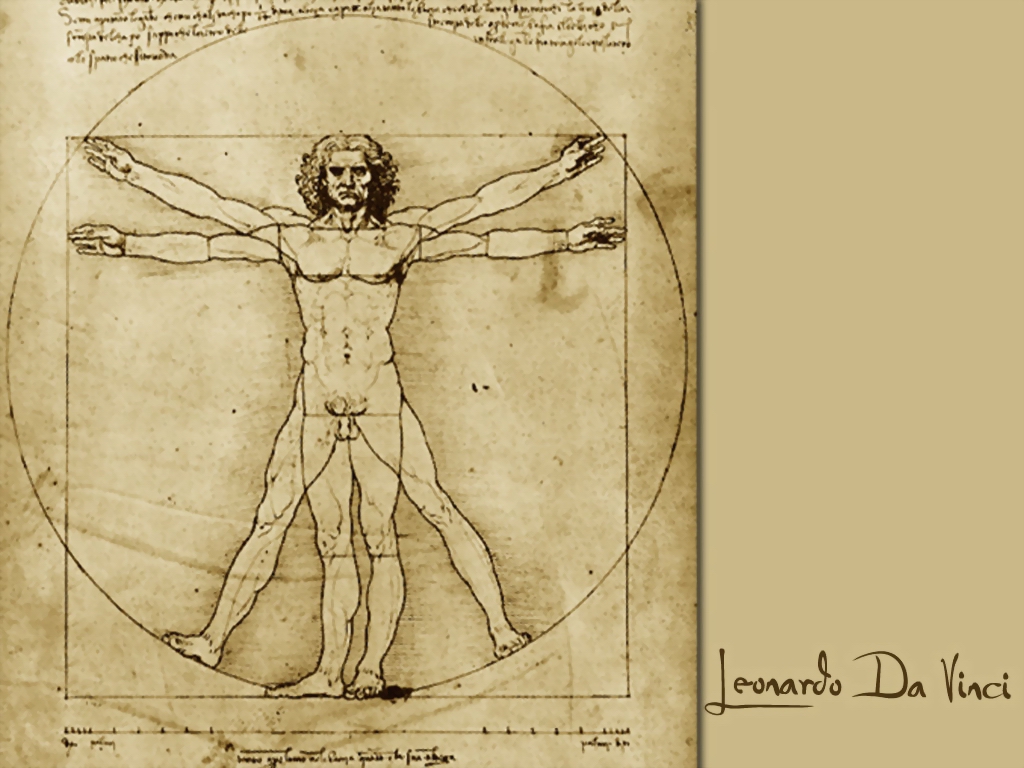

The last page of Isaacson’s book presents Leonardo da Vinci’s Vitruvian Man, 1492